class mrpt::obs::CObservation3DRangeScan

Overview

A depth or RGB+D image from a time-of-flight or structured-light sensor.

This kind of observations can carry one or more of these data fields:

3D point cloud (as {x,y,z}

floatvectors).Each 3D point has its associated

(u,v)pixel coordinates in points3D_idxs_x & points3D_idxs_y (New in MRPT 1.4.0)2D range image (as a matrix): Each entry in the matrix

rangeImage(ROW,COLUMN)contains a distance or a depth, depending on range_is_depth. Ranges are stored as uint16_t for efficiency. The units of ranges are stored separately inrangeUnits.2D intensity (grayscale or RGB) image (as a mrpt::img::CImage).

2D confidence image (as a mrpt::img::CImage): For each pixel, a 0x00 and a 0xFF mean the lowest and highest confidence levels, respectively.

Semantic labels: Stored as a matrix of bitfields, each bit having a user-defined meaning.

For cameras supporting multiple returns per pixels, different layers of range images are available in the map rangeImageOtherLayers.

The coordinates of the 3D point cloud are in meters with respect to the depth camera origin of coordinates, with the +X axis pointing forward, +Y pointing left-hand and +Z pointing up. By convention, a 3D point with its coordinates set to (0,0,0), will be considered as invalid.

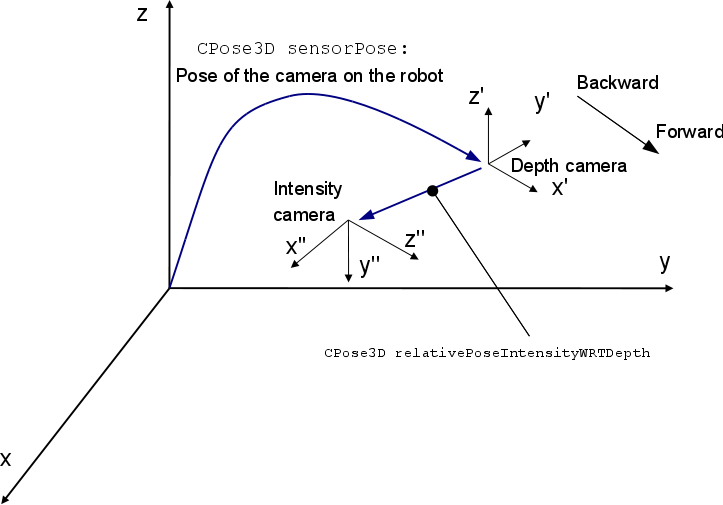

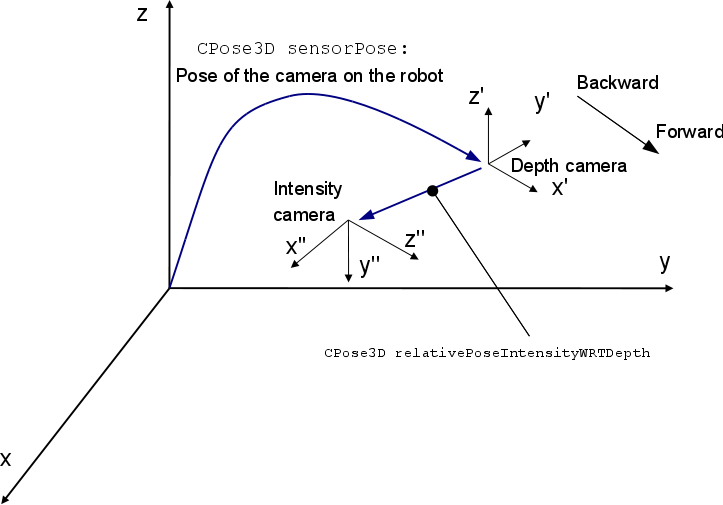

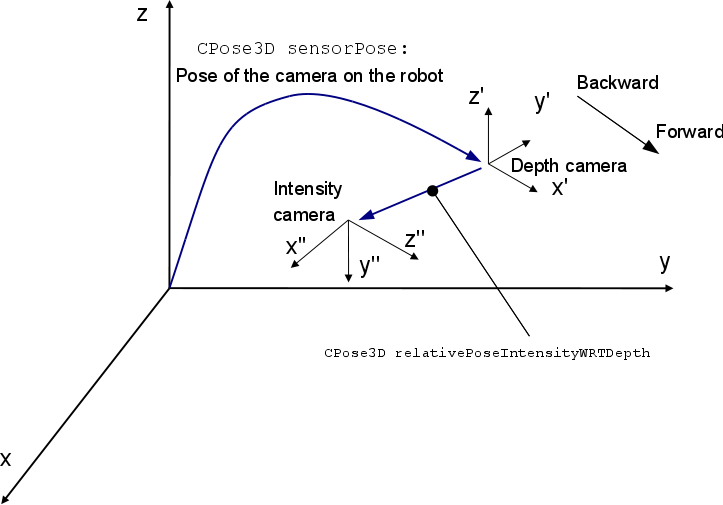

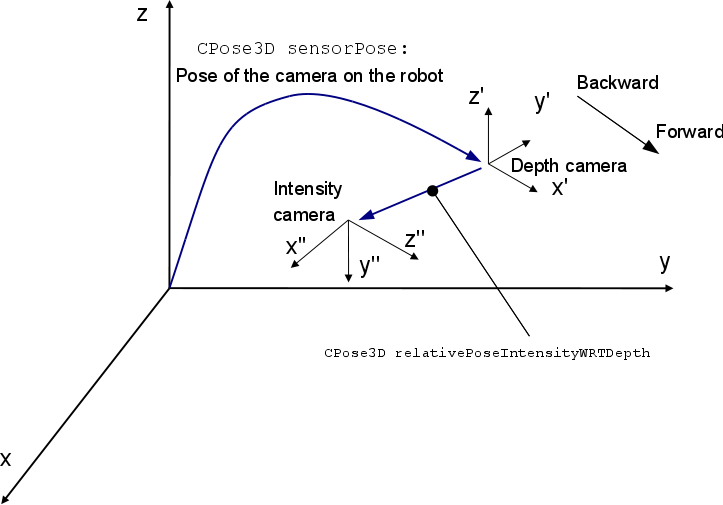

The field CObservation3DRangeScan::relativePoseIntensityWRTDepth describes the change of coordinates from the depth camera to the intensity (RGB or grayscale) camera. In some cameras both cameras coincide, so this pose would be just a rotation (0,0,0,-90deg,0,-90deg). In Kinect-like cameras there is also an offset, as shown in this figure:

In any case, check the field relativePoseIntensityWRTDepth, or the method doDepthAndIntensityCamerasCoincide() to determine if both frames of reference coincide, since even for Kinect cameras both can coincide if the images have been rectified.

The 2D images and matrices are stored as common images, with an up->down rows order and left->right, as usual. Optionally, the intensity and confidence channels can be set to delayed-load images for off-rawlog storage so it saves memory by having loaded in memory just the needed images. See the methods load() and unload(). Due to the intensive storage requirements of this kind of observations, this observation is the only one in MRPT for which it’s recommended to always call “load()” and “unload()” before and after using the observation, ONLY when the observation was read from a rawlog dataset, in order to make sure that all the externally stored data fields are loaded and ready in memory.

Some classes that grab observations of this type are:

There are two sets of calibration parameters (see mrpt::vision::checkerBoardStereoCalibration() or the ready-to-use GUI program kinect-calibrate :

cameraParams: Intrinsics of the depth camera.

cameraParamsIntensity: Intrinsics of the intensity (RGB) camera.

In some cameras, like SwissRanger, both are the same. It is possible in Kinect to rectify the range images such both cameras seem to coincide and then both sets of camera parameters will be identical.

Range data can be interpreted in two different ways depending on the 3D camera (this field is already set to the correct setting when grabbing observations from an mrpt::hwdrivers sensor):

range_is_depth==true: Kinect-like ranges: entries of rangeImage are distances along the +X (front-facing) axis.range_is_depth==false: Ranges in rangeImage are actual distances in 3D, i.e. a bit larger than the depth.

The intensity channel may come from different channels in sensors as Kinect. Look at field intensityImageChannel to find out if the image was grabbed from the visible (RGB) or IR channels.

3D point clouds can be generated at any moment after grabbing with CObservation3DRangeScan::unprojectInto(), provided the correct calibration parameters. Note that unprojectInto() will store the point cloud in sensor-centric local coordinates by default, but changing its parameters you can obtain a vehicle-centric, or world-frame point cloud instead.

Examples of how to assign labels to pixels (for object segmentation, semantic information, etc.):

// Assume obs of type CObservation3DRangeScan::Ptr obs->pixelLabels =TPixelLabelInfo::Ptr( new *CObservation3DRangeScan::TPixelLabelInfo<NUM_BYTES>() ); obs->pixelLabels->setSize(ROWS,COLS); obs->pixelLabels->setLabel(col,row, label_idx); // label_idxs = [0,2^NUM_BYTES-1] //...

Since MRPT 1.5.0, external files format can be selected at runtime with CObservation3DRangeScan::EXTERNALS_AS_TEXT

See also:

mrpt::hwdrivers::CSwissRanger3DCamera, mrpt::hwdrivers::CKinect, mrpt::hwdrivers::COpenNI2Sensor, mrpt::obs::CObservation

#include <mrpt/obs/CObservation3DRangeScan.h> class CObservation3DRangeScan: public mrpt::obs::CObservation { public: // enums enum TIntensityChannelID; // structs struct unproject_LUT_t; // fields bool hasIntensityImage {false}; mrpt::img::CImage intensityImage; TIntensityChannelID intensityImageChannel {CH_VISIBLE}; bool hasPoints3D {false}; std::vector<float> points3D_x; std::vector<float> points3D_y; std::vector<float> points3D_z; std::vector<uint16_t> points3D_idxs_x; std::vector<uint16_t> points3D_idxs_y; bool hasRangeImage {false}; mrpt::math::CMatrix_u16 rangeImage; std::map<std::string, mrpt::math::CMatrix_u16> rangeImageOtherLayers; float rangeUnits = 0.001f; bool range_is_depth {true}; bool hasConfidenceImage {false}; mrpt::img::CImage confidenceImage; TPixelLabelInfoBase::Ptr pixelLabels; mrpt::img::TCamera cameraParams; mrpt::img::TCamera cameraParamsIntensity; mrpt::poses::CPose3D relativePoseIntensityWRTDepth = {0, 0, 0, -90.0_deg, 0.0_deg, -90.0_deg}; float maxRange {5.0f}; mrpt::poses::CPose3D sensorPose; float stdError {0.01f}; // construction CObservation3DRangeScan(); // methods void resizePoints3DVectors(size_t nPoints); size_t getScanSize() const; void rangeImage_setSize(const int HEIGHT, const int WIDTH); mrpt::img::CImage rangeImage_getAsImage( const std::optional<mrpt::img::TColormap> color = std::nullopt, const std::optional<float> normMinRange = std::nullopt, const std::optional<float> normMaxRange = std::nullopt, const std::optional<std::string> additionalLayerName = std::nullopt ) const; static mrpt::img::CImage rangeImageAsImage( const mrpt::math::CMatrix_u16& ranges, float val_min, float val_max, float rangeUnits, const std::optional<mrpt::img::TColormap> color = std::nullopt ); bool hasPixelLabels() const; bool doDepthAndIntensityCamerasCoincide() const; virtual void getSensorPose(mrpt::poses::CPose3D& out_sensorPose) const; virtual void setSensorPose(const mrpt::poses::CPose3D& newSensorPose); virtual void load_impl() const; virtual void unload() const; bool points3D_isExternallyStored() const; std::string points3D_getExternalStorageFile() const; void points3D_getExternalStorageFileAbsolutePath(std::string& out_path) const; std::string points3D_getExternalStorageFileAbsolutePath() const; void points3D_convertToExternalStorage(const std::string& fileName, const std::string& use_this_base_dir); void points3D_overrideExternalStoredFlag(bool isExternal); bool rangeImage_isExternallyStored() const; std::string rangeImage_getExternalStorageFile(const std::string& rangeImageLayer) const; void rangeImage_getExternalStorageFileAbsolutePath(std::string& out_path, const std::string& rangeImageLayer) const; std::string rangeImage_getExternalStorageFileAbsolutePath(const std::string& rangeImageLayer) const; void rangeImage_convertToExternalStorage(const std::string& fileName, const std::string& use_this_base_dir); void rangeImage_forceResetExternalStorage(); template <class POINTMAP> void unprojectInto( POINTMAP& dest_pointcloud, const T3DPointsProjectionParams& projectParams = T3DPointsProjectionParams(), const TRangeImageFilterParams& filterParams = TRangeImageFilterParams() ); void convertTo2DScan(mrpt::obs::CObservation2DRangeScan& out_scan2d, const T3DPointsTo2DScanParams& scanParams, const TRangeImageFilterParams& filterParams = TRangeImageFilterParams()); void undistort(); virtual void getDescriptionAsText(std::ostream& o) const; void swap(CObservation3DRangeScan& o); void getZoneAsObs( CObservation3DRangeScan& obs, const unsigned int& r1, const unsigned int& r2, const unsigned int& c1, const unsigned int& c2 ); const unproject_LUT_t& get_unproj_lut() const; static void EXTERNALS_AS_TEXT(bool value); static bool EXTERNALS_AS_TEXT(); static double recoverCameraCalibrationParameters( const CObservation3DRangeScan& in_obs, mrpt::img::TCamera& out_camParams, const double camera_offset = 0.01 ); };

Inherited Members

public: // fields mrpt::system::TTimeStamp timestamp {mrpt::Clock::now()}; std::string sensorLabel; // methods mrpt::system::TTimeStamp getTimeStamp() const; virtual mrpt::system::TTimeStamp getOriginalReceivedTimeStamp() const; void load() const; virtual void unload() const; virtual bool exportTxtSupported() const; virtual std::string exportTxtHeader() const; virtual std::string exportTxtDataRow() const; template <class METRICMAP> bool insertObservationInto( METRICMAP& theMap, const std::optional<const mrpt::poses::CPose3D>& robotPose = std::nullopt ) const; virtual void getSensorPose(mrpt::poses::CPose3D& out_sensorPose) const = 0; void getSensorPose(mrpt::math::TPose3D& out_sensorPose) const; mrpt::math::TPose3D sensorPose() const; virtual void setSensorPose(const mrpt::poses::CPose3D& newSensorPose) = 0; void setSensorPose(const mrpt::math::TPose3D& newSensorPose); virtual void getDescriptionAsText(std::ostream& o) const; virtual std::string asString() const;

Fields

bool hasIntensityImage {false}

true means the field intensityImage contains valid data

mrpt::img::CImage intensityImage

If hasIntensityImage=true, a color or gray-level intensity image of the same size than “rangeImage”.

TIntensityChannelID intensityImageChannel {CH_VISIBLE}

The source of the intensityImage; typically the visible channel.

See also:

bool hasPoints3D {false}

true means the field points3D contains valid data.

std::vector<float> points3D_x

If hasPoints3D=true, the (X,Y,Z) coordinates of the 3D point cloud detected by the camera.

See also:

std::vector<uint16_t> points3D_idxs_x

If hasPoints3D=true, the (x,y) pixel coordinates for each (X,Y,Z) point in points3D_x, points3D_y, points3D_z.

bool hasRangeImage {false}

true means the field rangeImage contains valid data

mrpt::math::CMatrix_u16 rangeImage

If hasRangeImage==true, rangeImage(r,c) is a matrix of depth values as captured by the camera, with r the row index and c column index.

Matrix element are integers for efficiency of post-processing filters, etc. Zero means no return (i.e. invalid range, no return). To obtain range/depth in meters, multiply this matrix by rangeUnits.

For sensors with more than one return per ray, this matrix holds the range for the “STRONGEST” (normally the closest) return.

See also:

rangeUnits, range_is_depth, rangeUnits, hasRangeImage, rangeImageOtherLayers

std::map<std::string, mrpt::math::CMatrix_u16> rangeImageOtherLayers

Additional layer range/depth images.

Text labels are arbitrary and sensor-dependent, e.g. “LAST”, “SECOND”, “3rd”, etc.

float rangeUnits = 0.001f

The conversion factor from integer units in rangeImage and actual distances in meters.

Default is 0.001 m, that is 1 millimeter.

See also:

bool range_is_depth {true}

true: Kinect-like ranges: entries of rangeImage are distances along the +X axis (forward); false: Ranges in rangeImage are actual distances in 3D.

bool hasConfidenceImage {false}

true means the field confidenceImage contains valid data

mrpt::img::CImage confidenceImage

If hasConfidenceImage=true, an image with the “confidence” value [range 0-255] as estimated by the capture drivers.

TPixelLabelInfoBase::Ptr pixelLabels

All information about pixel labeling is stored in this (smart pointer to) structure; refer to TPixelLabelInfo for details on the contents User is responsible of creating a new object of the desired data type.

It will be automatically (de)serialized no matter its specific type.

mrpt::img::TCamera cameraParams

Projection parameters of the depth camera.

mrpt::img::TCamera cameraParamsIntensity

Projection parameters of the intensity (graylevel or RGB) camera.

mrpt::poses::CPose3D relativePoseIntensityWRTDepth = {0, 0, 0, -90.0_deg, 0.0_deg, -90.0_deg}

Relative pose of the intensity camera wrt the depth camera (which is the coordinates origin for this observation).

In a SwissRanger camera, this will be (0,0,0,-90deg,0,-90deg) since the location of both cameras coincide and there is only a rotation due to the different axes conventions (see picture above). In a Kinect, this will include a small lateral displacement and a rotation, according to the drawing on the top of this page.

See also:

doDepthAndIntensityCamerasCoincide

float maxRange {5.0f}

The maximum range allowed by the device, in meters (e.g.

8.0m, 5.0m,…)

mrpt::poses::CPose3D sensorPose

The 6D pose of the sensor on the robot.

float stdError {0.01f}

The “sigma” error of the device in meters, used while inserting the scan in an occupancy grid.

Methods

void resizePoints3DVectors(size_t nPoints)

Use this method instead of resizing all three points3D_x, points3D_y & points3D_z to allow the usage of the internal memory pool.

size_t getScanSize() const

Get the size of the scan pointcloud.

Method is added for compatibility with its CObservation2DRangeScan counterpart

void rangeImage_setSize(const int HEIGHT, const int WIDTH)

Similar to calling “rangeImage.setSize(H,W)” but this method provides memory pooling to speed-up the memory allocation.

mrpt::img::CImage rangeImage_getAsImage( const std::optional<mrpt::img::TColormap> color = std::nullopt, const std::optional<float> normMinRange = std::nullopt, const std::optional<float> normMaxRange = std::nullopt, const std::optional<std::string> additionalLayerName = std::nullopt ) const

Builds a visualization from the rangeImage.

The image is built with the given color map (default: grayscale) and such that the colormap range is mapped to ranges 0 meters to the field “maxRange” in this object, unless overridden with the optional parameters. Note that the usage of optional<> allows any parameter to be left to its default placing std::nullopt.

Parameters:

additionalLayerName |

If empty string or not provided, the main rangeImage will be used; otherwise, the given range image layer. |

See also:

static mrpt::img::CImage rangeImageAsImage( const mrpt::math::CMatrix_u16& ranges, float val_min, float val_max, float rangeUnits, const std::optional<mrpt::img::TColormap> color = std::nullopt )

Static method to convert a range matrix into an image.

If val_max is left to zero, the maximum range in the matrix will be automatically used.

See also:

bool hasPixelLabels() const

Returns true if the field CObservation3DRangeScan::pixelLabels contains a non-NULL smart pointer.

To enhance a 3D point cloud with labeling info, just assign an appropriate object to pixelLabels

bool doDepthAndIntensityCamerasCoincide() const

Return true if relativePoseIntensityWRTDepth equals the pure rotation (0,0,0,-90deg,0,-90deg) (with a small comparison epsilon)

See also:

virtual void getSensorPose(mrpt::poses::CPose3D& out_sensorPose) const

A general method to retrieve the sensor pose on the robot.

Note that most sensors will return a full (6D) CPose3D, but see the derived classes for more details or special cases.

See also:

virtual void setSensorPose(const mrpt::poses::CPose3D& newSensorPose)

A general method to change the sensor pose on the robot.

Note that most sensors will use the full (6D) CPose3D, but see the derived classes for more details or special cases.

See also:

virtual void load_impl() const

Makes sure all images and other fields which may be externally stored are loaded in memory.

Note that for all CImages, calling load() is not required since the images will be automatically loaded upon first access, so load() shouldn’t be needed to be called in normal cases by the user. If all the data were alredy loaded or this object has no externally stored data fields, calling this method has no effects.

See also:

virtual void unload() const

Unload all images, for the case they being delayed-load images stored in external files (otherwise, has no effect).

See also:

void points3D_convertToExternalStorage( const std::string& fileName, const std::string& use_this_base_dir )

Users won’t normally want to call this, it’s only used from internal MRPT programs.

See also:

void points3D_overrideExternalStoredFlag(bool isExternal)

Users normally won’t need to use this.

void rangeImage_getExternalStorageFileAbsolutePath( std::string& out_path, const std::string& rangeImageLayer ) const

rangeImageLayer: Empty for the main rangeImage matrix, otherwise, a key of rangeImageOtherLayers

void rangeImage_convertToExternalStorage( const std::string& fileName, const std::string& use_this_base_dir )

Users won’t normally want to call this, it’s only used from internal MRPT programs.

See also:

void rangeImage_forceResetExternalStorage()

Forces marking this observation as non-externally stored - it doesn’t anything else apart from resetting the corresponding flag (Users won’t normally want to call this, it’s only used from internal MRPT programs)

template <class POINTMAP> void unprojectInto( POINTMAP& dest_pointcloud, const T3DPointsProjectionParams& projectParams = T3DPointsProjectionParams(), const TRangeImageFilterParams& filterParams = TRangeImageFilterParams() )

Unprojects the RGB+D image pair into a 3D point cloud (with color if the target map supports it) and optionally at a given 3D pose.

The 3D point coordinates are computed from the depth image (rangeImage) and the depth camera camera parameters (cameraParams). There exist two set of formulas for projecting the i’th point, depending on the value of “range_is_depth”. In all formulas below, “rangeImage” is the matrix of ranges and the pixel coordinates are (r,c).

1) [range_is_depth=true] With “range equals depth” or “Kinect-like depth mode”: the range values are in fact distances along the “+X” axis, not real 3D ranges (this is the way Kinect reports ranges):

x(i) = rangeImage(r,c) * rangeUnits y(i) = (r_cx - c) * x(i) / r_fx z(i) = (r_cy - r) * x(i) / r_fy

[range_is_depth=false] With “normal ranges”: range means distance in 3D. This must be set when processing data from the SwissRange 3D camera, among others.

Ky = (r_cx - c)/r_fx Kz = (r_cy - r)/r_fy x(i) = rangeImage(r,c) * rangeUnits / sqrt( 1 + Ky^2 + Kz^2 ) y(i) = Ky * x(i) z(i) = Kz * x(i)

The color of each point is determined by projecting the 3D local point into the RGB image using cameraParamsIntensity.

By default the local (sensor-centric) coordinates of points are directly stored into the local map, but if indicated so in takeIntoAccountSensorPoseOnRobot the points are transformed with sensorPose. Furthermore, if provided, those coordinates are transformed with robotPoseInTheWorld

For multi-return sensors, only the layer specified in T3DPointsProjectionParams::layer will be unprojected.

Parameters:

POINTMAP |

Supported maps are all those covered by mrpt::opengl::PointCloudAdapter (mrpt::maps::CPointsMap and derived, mrpt::opengl::CPointCloudColoured, PCL point clouds,…) |

void convertTo2DScan( mrpt::obs::CObservation2DRangeScan& out_scan2d, const T3DPointsTo2DScanParams& scanParams, const TRangeImageFilterParams& filterParams = TRangeImageFilterParams() )

Convert this 3D observation into an “equivalent 2D fake laser scan”, with a configurable vertical FOV.

The result is a 2D laser scan with more “rays” (N) than columns has the 3D observation (W), exactly: N = W * oversampling_ratio. This oversampling is required since laser scans sample the space at evenly-separated angles, while a range camera follows a tangent-like distribution. By oversampling we make sure we don’t leave “gaps” unseen by the virtual “2D laser”.

All obstacles within a frustum are considered and the minimum distance is kept in each direction. The horizontal FOV of the frustum is automatically computed from the intrinsic parameters, but the vertical FOV must be provided by the user, and can be set to be assymetric which may be useful depending on the zone of interest where to look for obstacles.

All spatial transformations are riguorosly taken into account in this class, using the depth camera intrinsic calibration parameters.

The timestamp of the new object is copied from the 3D object. Obviously, a requisite for calling this method is the 3D observation having range data, i.e. hasRangeImage must be true. It’s not needed to have RGB data nor the raw 3D point clouds for this method to work.

If scanParams.use_origin_sensor_pose is true, the points will be projected to 3D and then reprojected as seen from a different sensorPose at the vehicle frame origin. Otherwise (the default), the output 2D observation will share the sensorPose of the input 3D scan (using a more efficient algorithm that avoids trigonometric functions).

Parameters:

out_scan2d |

The resulting 2D equivalent scan. |

See also:

The example in https://www.mrpt.org/tutorials/mrpt-examples/example-kinect-to-2d-laser-demo/

void undistort()

Removes the distortion in both, depth and intensity images.

Intrinsics (fx,fy,cx,cy) remains the same for each image after undistortion.

virtual void getDescriptionAsText(std::ostream& o) const

Build a detailed, multi-line textual description of the observation contents and dump it to the output stream.

If overried by derived classes, call base CObservation::getDescriptionAsText() first to show common information.

This is the text that appears in RawLogViewer when selecting an object in the dataset

void swap(CObservation3DRangeScan& o)

Very efficient method to swap the contents of two observations.

void getZoneAsObs( CObservation3DRangeScan& obs, const unsigned int& r1, const unsigned int& r2, const unsigned int& c1, const unsigned int& c2 )

Extract a ROI of the 3D observation as a new one.

PixelLabels are not copied to the output subimage.

const unproject_LUT_t& get_unproj_lut() const

Gets (or generates upon first request) the 3D point cloud projection look-up-table for the current depth camera intrinsics & distortion parameters.

Returns a const reference to a global variable. Multithread safe.

See also:

static void EXTERNALS_AS_TEXT(bool value)

Whether external files (3D points, range and confidence) are to be saved as .txt text files (MATLAB compatible) or *.bin binary (faster).

Loading always will determine the type by inspecting the file extension. Default=false

static double recoverCameraCalibrationParameters( const CObservation3DRangeScan& in_obs, mrpt::img::TCamera& out_camParams, const double camera_offset = 0.01 )

A Levenberg-Marquart-based optimizer to recover the calibration parameters of a 3D camera given a range (depth) image and the corresponding 3D point cloud.

Parameters:

camera_offset |

The offset (in meters) in the +X direction of the point cloud. |

camera_offset |

The offset (in meters) in the +X direction of the point cloud. It’s 1cm for SwissRanger SR4000. |

Returns:

The final average reprojection error per pixel (typ <0.05 px)

The final average reprojection error per pixel (typ <0.05 px)