class mrpt::obs::CObservationVelodyneScan

A CObservation -derived class for RAW DATA (and optionally, point cloud) of scans from 3D Velodyne LIDAR scanners.

A scan comprises one or more “velodyne packets” (refer to Velodyne user manual). Normally, a full 360 degrees sweep is included in one observation object. Note that if a pointcloud is generated inside this class, each point is annotated with per-point information about its exact azimuth and laser_id (ring number), an information that is loss when inserting the observation in a regular mrpt::maps::CPointsMap.

Main data fields:

CObservationVelodyneScan::scan_packets with raw data packets.

CObservationVelodyneScan::point_cloud normally empty after grabbing for efficiency, can be generated calling CObservationVelodyneScan::generatePointCloud()

Dual return mode is supported (see mrpt::hwdrivers::CVelodyneScanner).

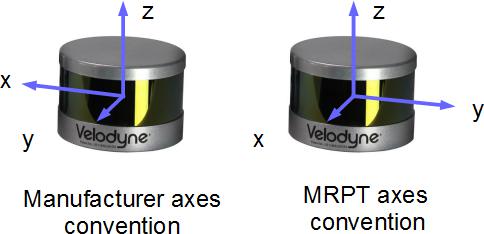

Axes convention for point cloud (x,y,z) coordinates:

If it can be assumed that the sensor moves SLOWLY through the environment (i.e. its pose can be approximated to be the same since the beginning to the end of one complete scan) then this observation can be converted / loaded into the following other classes:

Maps of points (these require first generating the pointcloud in this observation object with mrpt::obs::CObservationVelodyneScan::generatePointCloud()):

mrpt::maps::CPointsMap::loadFromVelodyneScan() (available in all derived classes)

and the generic method:mrpt:: maps::CPointsMap::insertObservation()

mrpt::opengl::CPointCloud and mrpt::opengl::CPointCloudColoured is supported by first converting this scan to a mrpt::maps::CPointsMap -derived class, then loading it into the opengl object.

Otherwise, the following API exists for accurate reconstruction of the sensor path in SE(3) over time:

Note that this object has two timestamp fields:

The standard CObservation::timestamp field in the base class, which should contain the accurate satellite-based UTC timestamp, and

the field CObservationVelodyneScan::originalReceivedTimestamp, with the local computer-based timestamp based on the reception of the message in the computer. Both timestamps correspond to the firing of the first laser in the first CObservationVelodyneScan::scan_packets packet.

New in MRPT 1.4.0

See also:

CObservation, mrpt::maps::CPointsMap, mrpt::hwdrivers::CVelodyneScanner

#include <mrpt/obs/CObservationVelodyneScan.h> class CObservationVelodyneScan: public mrpt::obs::CObservation { public: // structs struct PointCloudStorageWrapper; struct TGeneratePointCloudParameters; struct TGeneratePointCloudSE3Results; struct TPointCloud; struct TVelodynePositionPacket; struct TVelodyneRawPacket; struct laser_return_t; struct raw_block_t; // fields static const int SIZE_BLOCK = 100; static const int RAW_SCAN_SIZE = 3; static const int SCANS_PER_BLOCK = 32; static const int BLOCK_DATA_SIZE =(SCANS_PER_BLOCK* RAW_SCAN_SIZE); static const uint16_t ROTATION_MAX_UNITS = 36000; static constexpr float ROTATION_RESOLUTION = 0.01f; static constexpr float DISTANCE_MAX = 130.0f; static constexpr float DISTANCE_RESOLUTION = 0.002f; static const uint16_t UPPER_BANK = 0xeeff; static const uint16_t LOWER_BANK = 0xddff; static const int SCANS_PER_FIRING = 16; static const int PACKET_SIZE = 1206; static const int POS_PACKET_SIZE = 512; static const int BLOCKS_PER_PACKET = 12; static const int PACKET_STATUS_SIZE = 4; static const int SCANS_PER_PACKET =(SCANS_PER_BLOCK* BLOCKS_PER_PACKET); static const uint8_t RETMODE_STRONGEST = 0x37; static const uint8_t RETMODE_LAST = 0x38; static const uint8_t RETMODE_DUAL = 0x39; double minRange {1.0}; double maxRange {130.0}; mrpt::poses::CPose3D sensorPose; std::vector<TVelodyneRawPacket> scan_packets; mrpt::obs::VelodyneCalibration calibration; mrpt::system::TTimeStamp originalReceivedTimestamp {INVALID_TIMESTAMP}; bool has_satellite_timestamp {false}; TPointCloud point_cloud; // methods virtual mrpt::system::TTimeStamp getOriginalReceivedTimeStamp() const; void generatePointCloud(const TGeneratePointCloudParameters& params = TGeneratePointCloudParameters()); void generatePointCloud( PointCloudStorageWrapper& dest, const TGeneratePointCloudParameters& params = TGeneratePointCloudParameters() ); void generatePointCloudAlongSE3Trajectory( const mrpt::poses::CPose3DInterpolator& vehicle_path, std::vector<mrpt::math::TPointXYZIu8>& out_points, TGeneratePointCloudSE3Results& results_stats, const TGeneratePointCloudParameters& params = TGeneratePointCloudParameters() ); virtual void getSensorPose(mrpt::poses::CPose3D& out_sensorPose) const; virtual void setSensorPose(const mrpt::poses::CPose3D& newSensorPose); virtual void getDescriptionAsText(std::ostream& o) const; };

Inherited Members

public: // fields mrpt::system::TTimeStamp timestamp {mrpt::system::now()}; std::string sensorLabel; // methods mrpt::system::TTimeStamp getTimeStamp() const; virtual mrpt::system::TTimeStamp getOriginalReceivedTimeStamp() const; void load() const; virtual void unload() const; virtual bool exportTxtSupported() const; virtual std::string exportTxtHeader() const; virtual std::string exportTxtDataRow() const; template <class METRICMAP> bool insertObservationInto( METRICMAP& theMap, const std::optional<const mrpt::poses::CPose3D>& robotPose = std::nullopt ) const; virtual void getSensorPose(mrpt::poses::CPose3D& out_sensorPose) const = 0; void getSensorPose(mrpt::math::TPose3D& out_sensorPose) const; mrpt::math::TPose3D sensorPose() const; virtual void setSensorPose(const mrpt::poses::CPose3D& newSensorPose) = 0; void setSensorPose(const mrpt::math::TPose3D& newSensorPose); virtual void getDescriptionAsText(std::ostream& o) const; virtual std::string asString() const;

Fields

static const uint16_t ROTATION_MAX_UNITS = 36000

hundredths of degrees

static constexpr float ROTATION_RESOLUTION = 0.01f

degrees

static constexpr float DISTANCE_MAX = 130.0f

meters

static constexpr float DISTANCE_RESOLUTION = 0.002f

meters

static const uint16_t UPPER_BANK = 0xeeff

Blocks 0-31.

static const uint16_t LOWER_BANK = 0xddff

Blocks 32-63.

double minRange {1.0}

The maximum range allowed by the device, in meters (e.g.

100m). Stored here by the driver while capturing based on the sensor model.

mrpt::poses::CPose3D sensorPose

The 6D pose of the sensor on the robot/vehicle frame of reference.

std::vector<TVelodyneRawPacket> scan_packets

The main content of this object: raw data packet from the LIDAR.

See also:

mrpt::obs::VelodyneCalibration calibration

The calibration data for the LIDAR device.

See mrpt::hwdrivers::CVelodyneScanner and mrpt::obs::VelodyneCalibration for details.

mrpt::system::TTimeStamp originalReceivedTimestamp {INVALID_TIMESTAMP}

The local computer-based timestamp based on the reception of the message in the computer.

See also:

has_satellite_timestamp, CObservation::timestamp in the base class, which should contain the accurate satellite-based UTC timestamp.

bool has_satellite_timestamp {false}

If true, CObservation::timestamp has been generated from accurate satellite clock.

Otherwise, no GPS data is available and timestamps are based on the local computer clock.

TPointCloud point_cloud

Optionally, raw data can be converted into a 3D point cloud (local coordinates wrt the sensor, not the vehicle) with intensity (graylevel) information.

See axes convention in mrpt::obs::CObservationVelodyneScan

See also:

Methods

virtual mrpt::system::TTimeStamp getOriginalReceivedTimeStamp() const

By default, returns CObservation::timestamp but in sensors capable of satellite (or otherwise) accurate UTC timing of readings, this contains the computer-based timestamp of reception, which may be slightly different than timestamp.

See also:

void generatePointCloud(const TGeneratePointCloudParameters& params = TGeneratePointCloudParameters())

Generates the point cloud into the point cloud data fields in CObservationVelodyneScan::point_cloud where it is stored in local coordinates wrt the sensor (neither the vehicle nor the world).

So, this method does not take into account the possible motion of the sensor through the world as it collects LIDAR scans. For high dynamics, see the more costly API generatePointCloudAlongSE3Trajectory() Points with ranges out of [minRange,maxRange] are discarded; as well, other filters are available in params.

See also:

generatePointCloudAlongSE3Trajectory(), TGeneratePointCloudParameters

void generatePointCloudAlongSE3Trajectory( const mrpt::poses::CPose3DInterpolator& vehicle_path, std::vector<mrpt::math::TPointXYZIu8>& out_points, TGeneratePointCloudSE3Results& results_stats, const TGeneratePointCloudParameters& params = TGeneratePointCloudParameters() )

An alternative to generatePointCloud() for cases where the motion of the sensor as it grabs one scan (360 deg horiz FOV) cannot be ignored.

Parameters:

vehicle_path |

Timestamped sequence of known poses for the VEHICLE. Recall that the sensor has a relative pose wrt the vehicle according to CObservationVelodyneScan::getSensorPose() & CObservationVelodyneScan::setSensorPose() |

out_points |

The generated points, in the same coordinates frame than vehicle_path. Points are APPENDED to the list, so prevous contents are kept. |

results_stats |

Stats |

params |

Filtering and other parameters |

See also:

generatePointCloud(), TGeneratePointCloudParameters

virtual void getSensorPose(mrpt::poses::CPose3D& out_sensorPose) const

A general method to retrieve the sensor pose on the robot.

Note that most sensors will return a full (6D) CPose3D, but see the derived classes for more details or special cases.

See also:

virtual void setSensorPose(const mrpt::poses::CPose3D& newSensorPose)

A general method to change the sensor pose on the robot.

Note that most sensors will use the full (6D) CPose3D, but see the derived classes for more details or special cases.

See also:

virtual void getDescriptionAsText(std::ostream& o) const

Build a detailed, multi-line textual description of the observation contents and dump it to the output stream.

If overried by derived classes, call base CObservation::getDescriptionAsText() first to show common information.

This is the text that appears in RawLogViewer when selecting an object in the dataset